System Overview

A brief overview of the portfolio system

1.0 Overview

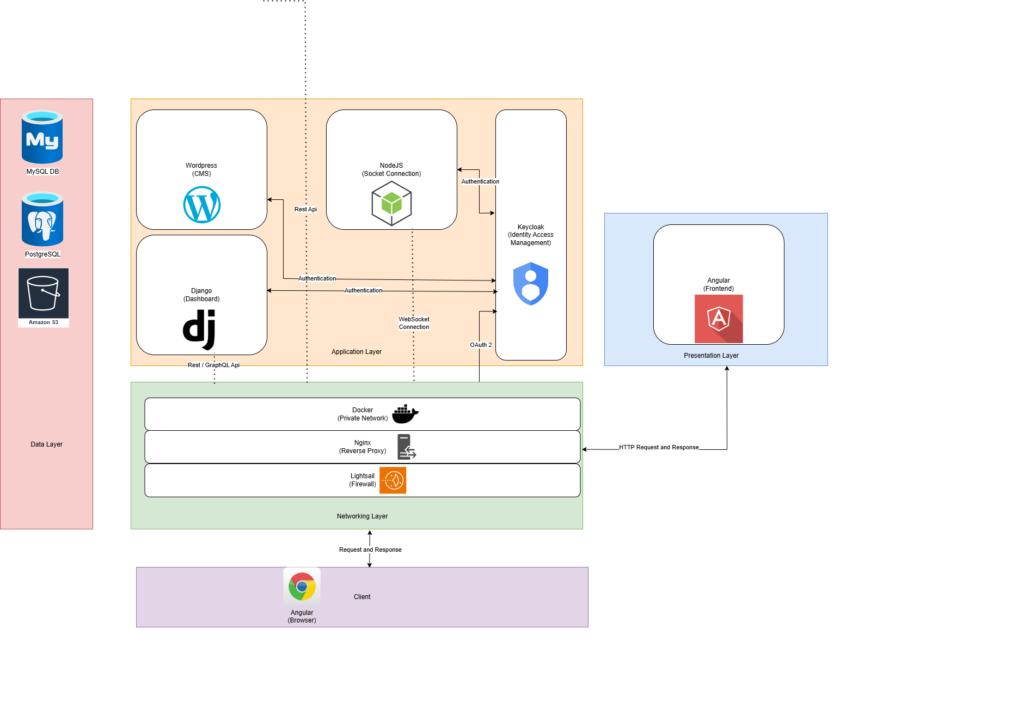

Welcome back! In my previous article, we delved into the infrastructure and automated deployment process that lay the foundation for my portfolio system. Think of it as setting the stage for a grand performance. Now, it’s time to raise the curtain and reveal the stars of the show: the applications themselves! To make this exploration manageable and engaging, I’ve organized the system into four distinct layers, each with its own unique role:

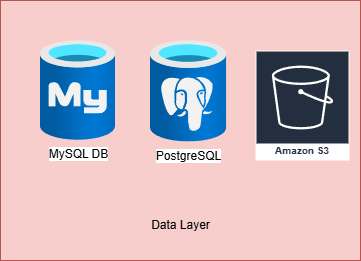

1. The Data Layer: This is where the vital information resides, the heart of our system. It houses both custom and third-party applications that act as secure vaults for our data. Think MySQL for WordPress, PostgreSQL for other applications, and so on.

2. The Application Layer: This layer is where the magic happens! It’s home to the applications that access, transform, and manage the data. Imagine WordPress diligently serving up blog posts, a Node.js application facilitating real-time communication, and Keycloak acting as the vigilant guardian of access control.

3. The Presentation Layer: This is the face of our system, the captivating interface that greets our users. Currently, it features a sleek Angular application, but exciting additions like Flutter-based mobile apps are on the horizon!

4. The Networking Layer: This layer ensures smooth communication and efficient traffic flow. It’s the intricate network of pathways connecting our applications, with Docker containers acting as individual hubs, all linked by the Docker network.

Now, I know what you’re thinking – diving deep into every component might feel like a marathon. Fear not! This article provides a scenic overview of the landscape, highlighting the key landmarks and their interactions. Later, we’ll embark on dedicated expeditions to explore each component in detail. So, buckle up and enjoy the journey!

Note: I have not created all the components yet. This portfolio is a work in progress and I am actively working on it. Therefore, I will provide GitHub links to all the applications that are currently available. I will keep updating the list here as the number of applications increases.

2.0 Layers and their components

The diagram above offers a bird’s-eye view of our system architecture – a snapshot of its current form. Of course, like any dynamic creation, it’s bound to evolve and adapt over time. Rest assured, I’ll keep this visual guide updated as the system grows and matures.

As you explore the diagram, you’ll notice the distinct layers and their respective components, each playing a crucial role in the overall symphony. The arrows illustrate the various requests a client might make, tracing the journey of data as it flows through the system.

Now, I must confess – for the sake of clarity, I’ve omitted one vital element from this architectural overview: logging. In my experience, logging is like a trusty compass, guiding you through the complexities of a growing system. Believe me, I’ve lost myself in the depths of production debugging, spending hours searching for the source of an issue. With proper logging, those hours can shrink to mere minutes or even seconds! But fear not, logging will have its own dedicated article where we can delve into its importance and intricacies.

In this section, we’ll take a closer look at each layer, examining its components and the rationale behind my technology choices. Get ready for a fascinating journey through the inner workings of the portfolio system!

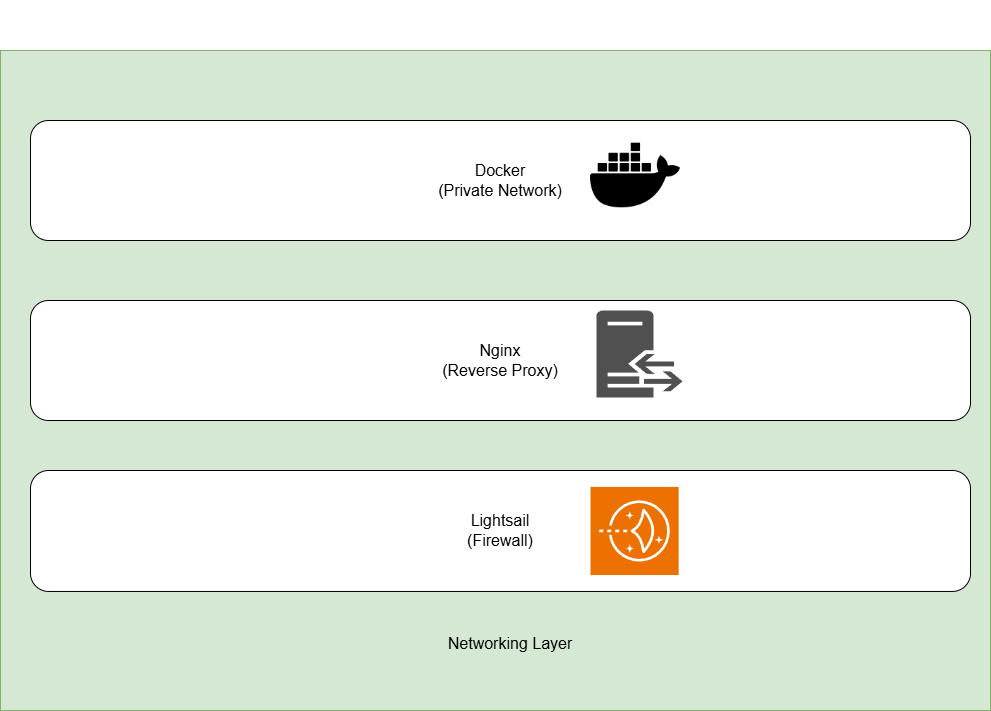

2.1 The Networking Layer

Welcome to the front lines! The networking layer acts as the vigilant gatekeeper of our system, the first point of contact for every incoming request. It’s a critical zone where we establish a robust security perimeter, ensuring only authorized traffic proceeds further.

Rather than reinventing the wheel, I’ve enlisted the help of several open-source allies, each playing a specific role in this carefully orchestrated sequence. Think of it as a series of checkpoints, each request passing through one layer before reaching the next. Here’s a closer look at our security personnel:

1. AWS Lightsail Firewall: Our applications reside within the comfy confines of AWS Lightsail instances, which come equipped with a handy built-in firewall. Embracing the principle of least privilege, I can meticulously control access to specific ports and endpoints, ensuring only the necessary doors are open. While Terraform’s support for managing these rules is still evolving, I’m happy to take the reins with a bit of manual configuration.

2. Nginx Reverse Proxy: Ah, Nginx, my trusty companion! This versatile tool excels not only as a web server but also as a masterful traffic conductor. Over the years, it’s become my go-to reverse proxy, thanks to its user-friendly configuration, open-source nature, and vibrant community support. Nginx stands ready to greet every incoming request (if permitted by Lightsail, of course), carefully determining its legitimacy, destination, and communication protocol. It’s also a prime location for logging all traffic, providing valuable insights into our system’s activity. Each public-facing application enjoys its own domain (or subdomain), a dedicated Nginx configuration file, and a secure SSL certificate. Nginx expertly maps authenticated requests to their appropriate routes, ensuring smooth and efficient delivery. And the best part? This is just the beginning! I have ambitious plans to introduce load balancers in the future, further enhancing our system’s resilience and scalability.

3. The Docker Network: Our backend applications reside in their own private Docker containers, like individual apartments within a larger complex. This isolation not only prevents conflicts but also simplifies deployment and promotes modularity. But don’t worry, these applications aren’t solitary creatures! They constantly communicate with each other through an external Docker network, a bustling hub of activity. This approach allows me to deploy applications independently, confident that their dependencies won’t interfere with one another. Furthermore, it paves the way for a seamless transition to Kubernetes in the future, should the need arise. Nginx acts as the concierge, directing requests to the appropriate host within the Docker network. The applications then diligently process these requests and send their responses back along the same path.

These are the key players in our current networking setup, providing a solid foundation for our system. But remember, this is just the beginning! As our needs evolve, we’ll likely upgrade or replace these components with more modern technologies. The beauty of our modular design is that these changes can occur without disrupting the other layers. It’s a testament to the power of flexibility and adaptability!
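The sequence of checkpoints described above (Lightsail firewall, then Nginx, then a container on the Docker network) can be sketched as a small routing model. This is only an illustration in Python, not the actual Nginx configuration: the domain names, container names, and ports below are hypothetical.

```python
from dataclasses import dataclass

# Ports the Lightsail firewall is assumed to leave open (HTTP and HTTPS).
OPEN_PORTS = {80, 443}

# Hypothetical mapping of public (sub)domains to containers on the Docker network.
UPSTREAMS = {
    "example.com": "frontend:80",
    "cms.example.com": "wordpress:80",
    "auth.example.com": "keycloak:8080",
}


@dataclass
class Request:
    host: str
    port: int
    path: str


def route(request: Request) -> str:
    """Return the upstream URL a request would be proxied to, or raise if rejected."""
    # Checkpoint 1: the firewall drops traffic on ports that are not explicitly opened.
    if request.port not in OPEN_PORTS:
        raise PermissionError(f"port {request.port} is closed by the firewall")
    # Checkpoint 2: the reverse proxy only serves hosts it has a configuration for.
    upstream = UPSTREAMS.get(request.host)
    if upstream is None:
        raise LookupError(f"no configuration for host {request.host!r}")
    # Checkpoint 3: forward to the container by its name on the Docker network.
    return f"http://{upstream}{request.path}"


print(route(Request("cms.example.com", 443, "/wp-json/wp/v2/posts")))
# http://wordpress:80/wp-json/wp/v2/posts
```

Because each public application has its own entry, adding a new service in this model means adding one mapping, which mirrors the one-configuration-file-per-domain approach described for Nginx.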

2.2 The Presentation Layer

Now, let’s step into the spotlight and explore the presentation layer – the captivating realm where users interact with our system. Currently, this layer is elegantly simple, featuring a single star performer: our Angular application.

Why Angular, you ask? Well, let’s just say we have a fantastic rapport! I’ve always admired its elegant structure, particularly the concepts of services, components, and their seamless data flow. It aligns perfectly with my preferred backend technologies, creating a harmonious symphony of code.

This Angular application is the face of our system, the welcoming gateway for all users. Rest assured, every file it hosts is carefully guarded by our robust networking layer. From the user’s browser, the application communicates with backend services, traversing securely through the network.

On the security front, I’ve implemented routes and AuthGuards within the Angular application itself, adding an extra layer of protection. However, the golden rule of frontend development remains paramount: never expose sensitive information in the client-side code. Our Angular application diligently authenticates with our IAM service before accessing any private backend endpoints.
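The guard idea can be illustrated outside Angular as well. The sketch below, written in Python for brevity, decides whether a private route may be opened by reading the `exp` claim of a JWT access token. It only decodes the payload and does not verify the signature, so it is a convenience check for the client; the backend and the IAM service must still validate every token. The function names are hypothetical.

```python
import base64
import json
import time
from typing import Any, Dict, Optional


def decode_jwt_payload(token: str) -> Dict[str, Any]:
    """Decode the payload segment of a JWT without verifying its signature."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("a JWT has three dot-separated segments")
    payload = parts[1]
    # JWT segments use URL-safe base64 without padding, so restore the padding first.
    payload += "=" * (-len(payload) % 4)
    claims = json.loads(base64.urlsafe_b64decode(payload))
    if not isinstance(claims, dict):
        raise ValueError("the JWT payload must be a JSON object")
    return claims


def can_activate(token: Optional[str], now: Optional[float] = None) -> bool:
    """Guard check: allow a private route only if a token exists and has not expired."""
    if not token:
        return False
    try:
        claims = decode_jwt_payload(token)
    except ValueError:
        # Malformed tokens are treated the same as missing ones.
        return False
    expires_at = claims.get("exp")
    if not isinstance(expires_at, (int, float)):
        return False
    current_time = time.time() if now is None else now
    return expires_at > current_time
```

A guard like this keeps users away from screens they cannot use, but it is not a security boundary on its own, which is why the private endpoints still require authentication through the IAM service.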

Looking ahead, I envision a future where Flutter-powered mobile applications join the stage. This will extend our system’s reach, allowing users to seamlessly interact with it from their mobile devices. Imagine the possibilities!

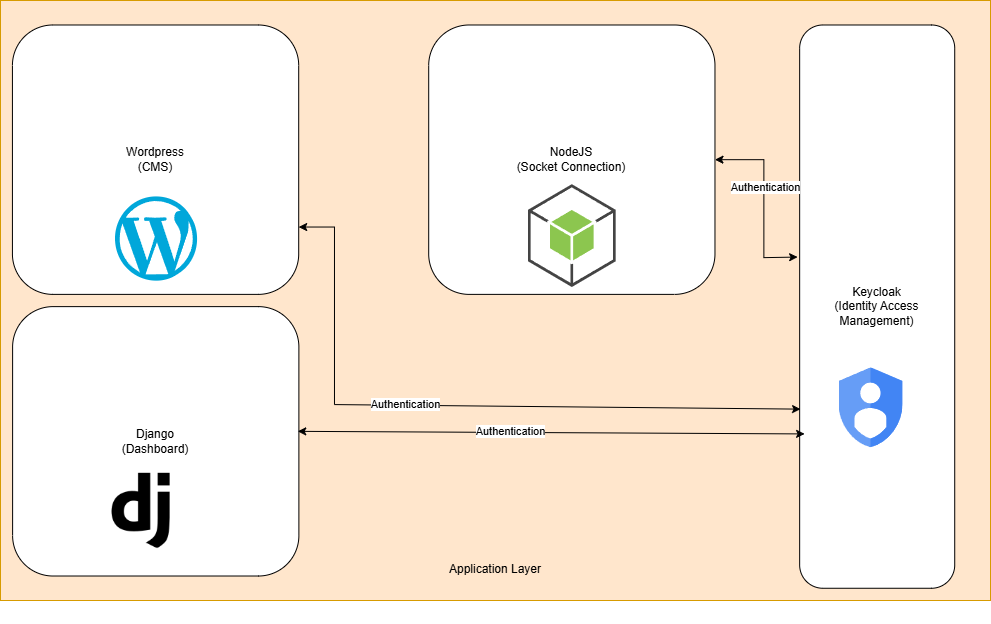

2.3 The Application Layer

Now, let’s journey into the engine room of our system: the application layer! This is where the real magic happens, where data is transformed, logic is executed, and the system’s true potential is unleashed. Each application in this layer is an independent service that communicates with the client application over HTTP or WebSockets, exposing API styles such as REST and GraphQL. While these services can also chat amongst themselves, I haven’t implemented inter-service communication just yet. But don’t worry, we’ll explore those plans soon!

As our system evolves, this layer is likely to become a bustling hub of activity, with new applications joining the ensemble. To maintain order and clarity, I’ve decided to categorize these applications into two distinct groups:

1. Public Applications: These are the extroverts of the application layer, boasting public-facing APIs that allow interaction with the outside world. Our frontend applications primarily access these APIs using familiar protocols like HTTP and WebSockets. But it’s not an exclusive club for internal applications! External third-party applications can also join the party, accessing these REST endpoints. Currently, WordPress is diligently serving as our CMS, and I’m in the process of integrating Keycloak as our Identity and Access Management solution. But the excitement doesn’t stop there: Django and Node.js are waiting in the wings, ready to contribute their unique talents.

2. Private Applications: These are the introverts, content to work behind the scenes without any public endpoints. They prefer to communicate with other applications within the system, typically backend services. While this group is currently empty, I anticipate a CRON scheduler will soon take on this role, diligently performing scheduled tasks. For inter-service communication, I’ve chosen the ever-reliable RabbitMQ. Its simplicity, open-source nature, and compatibility with various modern languages make it the perfect choice for crafting an event-driven architecture.

With this dynamic duo of public and private applications, our system is poised for growth and flexibility. The application layer is truly the heart of our system, pumping data and logic throughout its veins. And as we continue to develop and expand, this layer will undoubtedly become an even more vibrant and essential part of the overall architecture.

Our application layer is a vibrant ecosystem of specialized services, each playing a crucial role in delivering a rich and engaging user experience. Here’s a closer look at the key players that currently grace this stage, or will soon make their grand entrance:

1. WordPress CMS: For crafting compelling blog posts and documentation (like the very article you’re reading!), WordPress is my trusted companion. Its rich CMS, enhanced by the visual editor Elementor, provides an intuitive and powerful platform for content creation. However, I prefer to keep my frontend code sleek and modern, leveraging the strengths of frameworks like Angular. That’s why I’ve embraced WordPress’s flexible REST API to seamlessly fetch and display posts and articles. This approach not only separates concerns but also allows me to customize and extend the API endpoints to perfectly suit my needs.

2. Node.js Streaming Application: When it comes to real-time streaming and WebSockets, the asynchronous prowess of JavaScript and Node.js is undeniable. This application will harness the power of Node.js to showcase my expertise in WebSockets and WebRTC, delivering dynamic and interactive streaming features. Get ready for an immersive experience!

3. Django Dashboard: To demonstrate my passion for machine learning and artificial intelligence, I’ve enlisted the help of Python – the undisputed king of this domain. By analyzing system data and metrics, I can showcase the power of machine learning to extract valuable insights. Django, a battle-tested and full-featured web framework, provides the perfect foundation for building a robust and scalable dashboard. But it won’t stop there! This Django application will also evolve into the backoffice of my system, managing critical administrative functions.

4. Keycloak IAM: Security is paramount in any web application, and that’s where Keycloak takes center stage. This open-source Identity and Access Management (IAM) solution from Red Hat provides a comprehensive suite of tools for authentication and authorization. With Keycloak, I can implement access control mechanisms such as RBAC, PBAC, and ABAC, ensuring that only authorized users can access specific resources. It also offers a range of advanced authentication features, including acting as an OAuth 2.0 provider, two-factor authentication, and JWT-based authentication. But the true beauty of Keycloak lies in its customizability, allowing me to tailor it precisely to my needs. By offloading the complexities of authentication and authorization to Keycloak, I can focus on designing a robust and secure system.

5. The .NET Scheduler: While not depicted in the system diagram, a robust scheduler is essential for automating background tasks. My plan is to develop a cron scheduler using .NET Core, the open-source, cross-platform edition of .NET. To be honest, the implementation of this scheduler is more about .NET than the scheduler itself. I am a fan of .NET when it comes to statically typed languages and working with a framework backed by an entire enterprise-grade ecosystem. However, the wider Microsoft ecosystem can be expensive. Therefore, this project allows me to explore the capabilities of .NET Core in a Linux environment, integrating it with other open-source technologies. It’s an exciting opportunity to test whether enterprise-grade .NET applications can be built and deployed without relying solely on the Microsoft ecosystem.
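Returning to the WordPress integration from the first item above, here is a minimal sketch of how a client could request posts from the WordPress REST API and reduce the response to what a frontend needs. The `/wp-json/wp/v2/posts` route, the `per_page` and `page` query parameters, and the `title.rendered` field come from the WordPress REST API; the base URL and the helper names are hypothetical, and the sketch is in Python rather than the Angular code the frontend actually uses.

```python
import json
from typing import Any, Dict, List
from urllib.parse import urlencode
from urllib.request import urlopen


def posts_url(base_url: str, per_page: int = 10, page: int = 1) -> str:
    """Build the URL for listing posts through the WordPress REST API."""
    query = urlencode({"per_page": per_page, "page": page})
    return f"{base_url.rstrip('/')}/wp-json/wp/v2/posts?{query}"


def summarize_posts(raw_json: str) -> List[Dict[str, Any]]:
    """Keep only the fields a post listing page would display."""
    posts = json.loads(raw_json)
    return [
        {
            "id": post["id"],
            # WordPress returns the title as an object with a rendered HTML string.
            "title": post["title"]["rendered"],
            "link": post["link"],
        }
        for post in posts
    ]


def fetch_posts(base_url: str, per_page: int = 10) -> List[Dict[str, Any]]:
    """Fetch and summarize posts (performs a real HTTP request)."""
    with urlopen(posts_url(base_url, per_page), timeout=10) as response:
        return summarize_posts(response.read().decode("utf-8"))
```

Keeping the URL building and the response shaping in separate functions means the shaping logic can be exercised with a saved JSON sample, without a running WordPress instance.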

2.4 The Data Layer

Now, let’s descend into the bedrock of our system – the data layer! This is where we encounter the raw essence of information, the foundation upon which our entire digital world is built. At its core, digital data is simply a collection of binary digits, meticulously arranged to represent something meaningful. But what truly distinguishes one piece of data from another is how it’s structured, how it relates to other data, and how it’s stored for optimal retrieval and manipulation.

When it comes to data and databases, I like to categorize information into three distinct types:

1. Structured Data: This is the well-organized data that fits neatly into rows and columns, like soldiers in formation. It’s easily searchable and analyzable, making it ideal for traditional relational databases. Think of customer records, financial transactions, or product inventories. In this system, examples include user data, role and permission data, and posts and their categories.

2. Unstructured Data: This is the wild and free-form data that resists rigid structures. It encompasses everything from text documents and emails to images, audio files, and videos. While it can be more challenging to manage and analyze, it holds a wealth of potential insights. In this system, examples include logs, comments, and system metrics.

3. Blobs: These are the large chunks of binary data, often representing multimedia files or complex objects. They require specialized storage and handling techniques to ensure efficient access and retrieval.

I once found myself in a meeting with senior developers, embroiled in a heated debate about the age-old question: SQL or NoSQL? As we planned the architecture for a new system, the room divided into two camps – the frontend developers, champions of NoSQL databases like MongoDB and DynamoDB, and the backend developers, staunch supporters of SQL stalwarts like PostgreSQL and Oracle.

The frontend developers, accustomed to the flexibility of NoSQL in their MERN stacks, argued for its agility and scalability. Meanwhile, the backend developers, comfortable with the relational structure and ACID properties of SQL, advocated for its reliability and consistency.

Observing this passionate exchange, I realized the futility of confining ourselves to a single database paradigm. Why limit ourselves when we can leverage the strengths of both? My approach is to embrace the diversity of data, categorizing it into structured, unstructured, and blobs, and then selecting the most suitable database for each type. This allows us to tailor our data storage strategy to the specific needs of each application, rather than forcing the data to conform to a single database model.

Of course, this approach introduces the challenge of synchronizing data across different databases. But fear not! This is where the elegance of event-driven architecture and the CQRS pattern shines. CQRS (Command Query Responsibility Segregation) is not just about separating read and write operations; it’s about representing a single piece of information in different ways to optimize both retrieval and storage. For instance, one service might store a specific piece of data (such as order information) in a relational database for complex queries and joins, while another might use a vector database like Weaviate to store the same data for efficient similarity searches and recommendations.

By combining CQRS with an event-driven architecture, we can keep data consistent across our diverse database landscape, although only eventually consistent, since subscribers apply each change after the original write has happened. When a data change occurs, an event is published, triggering updates in all subscribed services, regardless of their underlying database technology.
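A minimal, in-memory sketch of this idea follows. It stands in for a real broker such as RabbitMQ with a plain Python class, and for the two databases with dictionaries, so every name in it (`EventBus`, `OrderWriteModel`, `CustomerOrdersReadModel`, the `order.created` event) is hypothetical. The point is only to show one write producing an event that keeps a differently shaped read model in sync.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Event = Dict[str, Any]


class EventBus:
    """In-memory stand-in for a message broker: delivers each event to its subscribers."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[Event], None]]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Callable[[Event], None]) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, event: Event) -> None:
        for handler in self._subscribers[event_type]:
            handler(event)


class OrderWriteModel:
    """Command side: stores orders keyed by id (a relational table in a real system)."""

    def __init__(self, bus: EventBus) -> None:
        self._bus = bus
        self.orders: Dict[int, Event] = {}

    def create_order(self, order_id: int, customer: str, total: float) -> None:
        order = {"id": order_id, "customer": customer, "total": total}
        self.orders[order_id] = order
        # Announce the change so other services can update their own stores.
        self._bus.publish("order.created", dict(order))


class CustomerOrdersReadModel:
    """Query side: the same information, reshaped as a running total per customer."""

    def __init__(self, bus: EventBus) -> None:
        self.totals: Dict[str, float] = defaultdict(float)
        bus.subscribe("order.created", self._on_order_created)

    def _on_order_created(self, event: Event) -> None:
        self.totals[event["customer"]] += event["total"]


bus = EventBus()
read_model = CustomerOrdersReadModel(bus)
write_model = OrderWriteModel(bus)
write_model.create_order(1, "alice", 30.0)
write_model.create_order(2, "alice", 12.5)
print(read_model.totals["alice"])  # 42.5
```

In this sketch the handlers run synchronously inside `publish`, so the read model is updated immediately. With a real broker the delivery is asynchronous, which is where the short window of inconsistency between the write side and the read side comes from.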

Therefore, I haven’t restricted my portfolio system to a single database technology. Instead, I’m curating a collection of SQL databases, NoSQL databases, and object storage, each chosen for its unique strengths and suitability for specific applications. This approach allows me to optimize data storage and retrieval, ensuring my system remains flexible, scalable, and performant.

My portfolio system currently relies on three primary databases, each serving a distinct purpose. However, as the system expands and new applications emerge, I anticipate welcoming other members to this data storage family, such as MongoDB, Solr, and Elasticsearch. Here’s a closer look at the current lineup:

1. MySQL: This reliable workhorse is dedicated to powering the WordPress CMS. WordPress officially supports MySQL and MariaDB but not PostgreSQL, so I remain open to migrating to PostgreSQL should official support arrive in the future.

2. PostgreSQL: This robust relational database is the cornerstone for managing structured data. It provides a solid foundation for storing and querying relational data across various applications, enabling efficient joins and complex queries.

3. AWS S3: For handling blobs like images and files, AWS S3 is an ideal choice. Its cost-effectiveness, scalability, and range of storage classes (such as S3 Standard, S3 Intelligent-Tiering, and S3 Glacier) offer a good balance of performance and affordability. I can select the appropriate storage class based on the type of data and its access frequency.

This diverse collection of databases ensures that each application has access to the most suitable storage solution for its specific needs. As the system evolves, I’ll continue to evaluate and incorporate new database technologies, ensuring that my data management strategy remains flexible, scalable, and optimized for performance.
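The idea of choosing an S3 storage class from access frequency can be sketched as a small function. The returned strings are values the S3 API accepts for an object’s storage class, but the threshold and the function name are hypothetical, chosen only for illustration and not taken from AWS guidance.

```python
from typing import Optional


def pick_storage_class(reads_per_month: Optional[float]) -> str:
    """Suggest an S3 storage class from an expected access frequency.

    The one-read-per-month threshold is an illustrative assumption.
    """
    if reads_per_month is None:
        # Unknown or changing access pattern: let S3 move the object between tiers.
        return "INTELLIGENT_TIERING"
    if reads_per_month < 0:
        raise ValueError("reads_per_month cannot be negative")
    if reads_per_month >= 1:
        # Read at least monthly: keep it in the default, immediately available class.
        return "STANDARD"
    # Rarely read: archive it and accept slower, more expensive retrieval.
    return "GLACIER"


print(pick_storage_class(20))    # STANDARD
print(pick_storage_class(0.1))   # GLACIER
print(pick_storage_class(None))  # INTELLIGENT_TIERING
```

For a portfolio site, images embedded in posts would fall into the first group, while old backups or raw uploads that are kept only for safety are natural candidates for an archive class.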

3.0 Conclusion

And there you have it: a comprehensive overview of my portfolio system’s architecture! We’ve journeyed through each layer, from the vigilant networking layer to the dynamic application layer, the captivating presentation layer, and the foundational data layer. I will dedicate one or more posts to the components in each layer, diving deep into the nitty-gritty details of each one.

This system, much like a living organism, is constantly evolving. New technologies will emerge, requirements will shift, and the architecture will adapt. But the core principles of modularity, scalability, and maintainability will remain steadfast.

By embracing a diverse range of technologies and thoughtfully selecting the best tools for each task, I’ve created a system that is not only robust and performant but also flexible and adaptable. This approach empowers me to experiment with new ideas, integrate emerging technologies, and continuously refine the system to meet evolving needs. The purpose of this system is not to build the simplest application but to give me a platform for experimenting with new technologies and showcasing my skills. Therefore, I have chosen an architecture that is not simple but is scalable and modular.

I hope this exploration has provided valuable insights into my architectural decisions and inspired you to embark on your own architectural adventures. Remember, the most effective architecture is not always the most complex; it’s the one that best aligns with your goals, resources, and vision.

So, go forth and build amazing things! The world is your playground, and the possibilities are limitless.

4.0 References

1. https://github.com/zuhairmhtb/ThePortfolioInfrastructure

2. https://github.com/zuhairmhtb/ThePortfolioCMS

3. https://github.com/zuhairmhtb/ThePortfolioFrontend

4. https://angular.dev/overview

5. https://docs.aws.amazon.com/lightsail/

6. https://nginx.org/en/docs/

7. https://docs.docker.com/

8. https://developer.wordpress.org/rest-api/

9. https://www.keycloak.org/documentation

10. https://nodejs.org/docs/latest/api/

11. https://docs.djangoproject.com/en/5.1/

12. https://dev.mysql.com/doc/

13. https://www.postgresql.org/docs/

14. https://docs.aws.amazon.com/AmazonS3/latest/userguide/storage-class-intro.html

15. https://learn.microsoft.com/en-us/azure/architecture/patterns/cqrs

16. https://www.confluent.io/learn/event-driven-architecture/